Thinking models

aka systematic processes, empirical methods, experimental techniques, and probably a few more. As far as I've been able to research, there isn't a category for them. (I didn't start wondering what others call them as a group until I went to title this page, though I've been thinking about them together for a while as a truth-honing endeavor.) Epistemology might come close, but my understanding of epistemology is that it's mostly looking backwards to test the information trail, less about building forward.

This page title is likely to change. I suggest orienting to it through the landing page.

It's also a reminder: there's always something more to learn, even when you've plumbed depths. ;)

Humanity knows that information gets skewed, and we have for a long time. We have developed several patterns of looking at and testing information so we can get it better aligned with reality, over time.

All of these models have embedded in them the scent of humanity's inconsistent and evolving grasp of reality. All of them use information literacy mechanics as a baseline.

- Critical thinking is about trying to suss out information inconsistencies and gaps. It's taking a step back and looking at logic and thinking as a domain in which a particular set of information is built.

- Triangulation is about adding different perspectives, and setting up an environment for patterns to form bottom-up. Basically: people know their own experience, and insights can develop when existing information structures are set aside and stop telling people who/what they are.

- Scientific method leans in on replicability. It assumes there is cognitive bias, even in working groups, and that potentially unknown environmental factors could be skewing the process.

- Humanism as a thinking model is, I think, emerging; I'll get deeper into that in its standalone summation. If we decide to care about humanity, we can catch the malformed information systems we've devised sooner – far before we make the planet inhospitable to current species. We take care of the big picture by caring about each other, reframing people's system-induced pain as early warning bells.

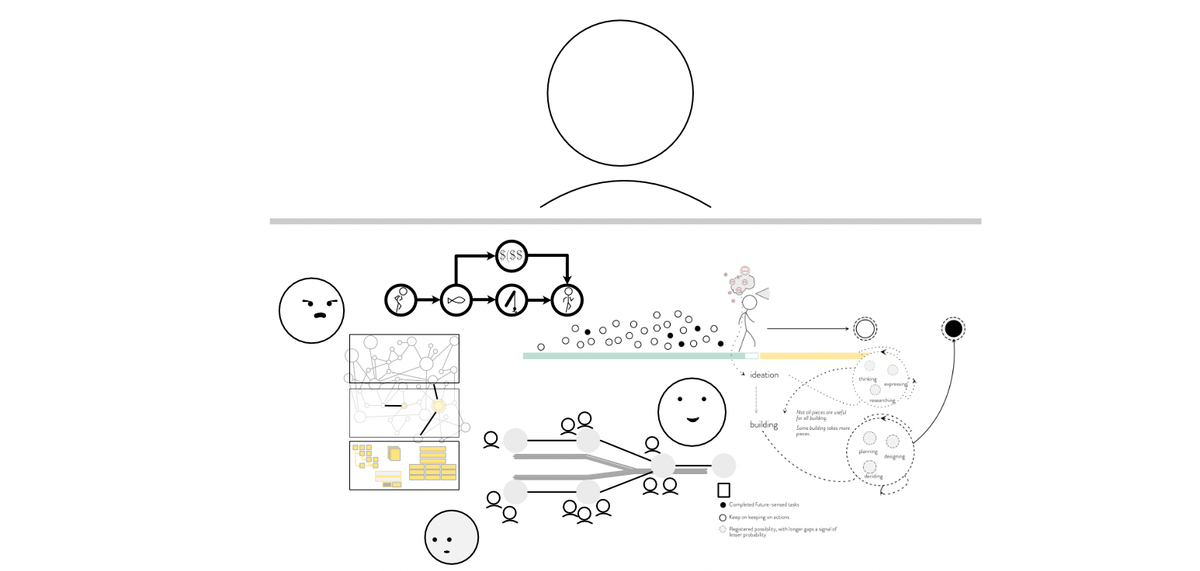

These models are interleaved in research and sense making, using what's most applicable to the information at hand. Information is complex. How we think it through is equally complex.

What's tacit, and only barely nodded to in the above descriptions, is that these thinking models all include accountability, with accountability as a potential information skew.

The brain(s) parsing information is a potential information skew.

^ That's massive.

That's a shift away from:

- public, GED education standards that assume the authority line of teacher/school system/credentials, and sometimes state/federal;

- trusting experts just because they have a degree;

- attributing source(s) primarily when the information presented is bad, tacitly saying, "it's not my problem (ChatGPT made me do it)";

- assuming hierarchy as the only option for both information transmission and inter-behavioral structures (culture, government, society);

- and, if we think really hard, the assumption that tearing down a bit of what an expert says lets you cloak yourself in their total expertise and authority.

Here's the bonus part: the more people thinking, from all their perspectives and with information literacy, the closer the information we build together gets to functional reality. That's why we have these models. We have a cleaner, more useful, more functional bead on reality if we work together. Hoo, darling. That's worth repeating.

We have a cleaner, more useful, more functional bead on reality if we work together.

That's a lot to think about. :) My suggestion: take a break, let it soak in. Do what you do to help you think: take a walk? Stare at the ceiling/wall? Talk with someone you trust? You do you, just make sure it's being folded in rather than set aside.